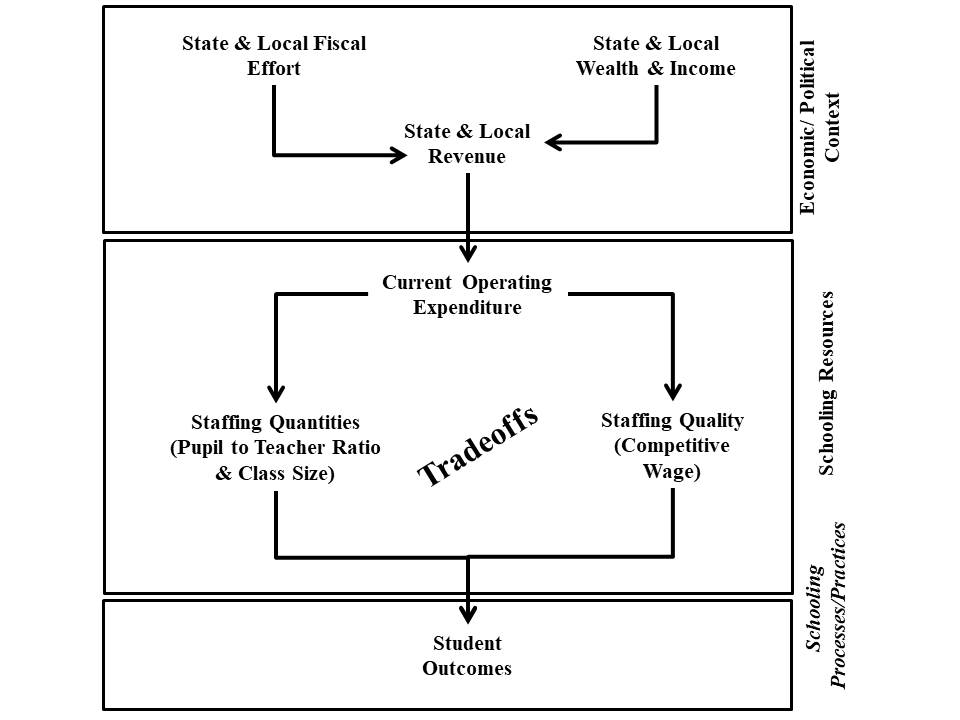

Basic Model: From Revenue Sources to Classroom Resources

The basic model linking available revenues, schooling resources and ultimately student outcomes remains relatively simple. Setting aside federal revenue for the moment, which is about 10% of education revenue on average, Figure 12 illustrates that the amount of revenue state and local education systems tend to have is a function of both a) fiscal capacity and b) fiscal effort. On average, some states put forth more effort than others and some states have more capacity to raise revenue than others. These differences lead to vast differences in school funding across states, and subsequently to vast differences in classroom resources. Federal aid is far from sufficient to close these gaps. Similarly, within some states, local districts have widely varied capacity to raise revenue for their schools, and some differences in revenues also result from differences in local effort. State effort to close these gaps varies widely.

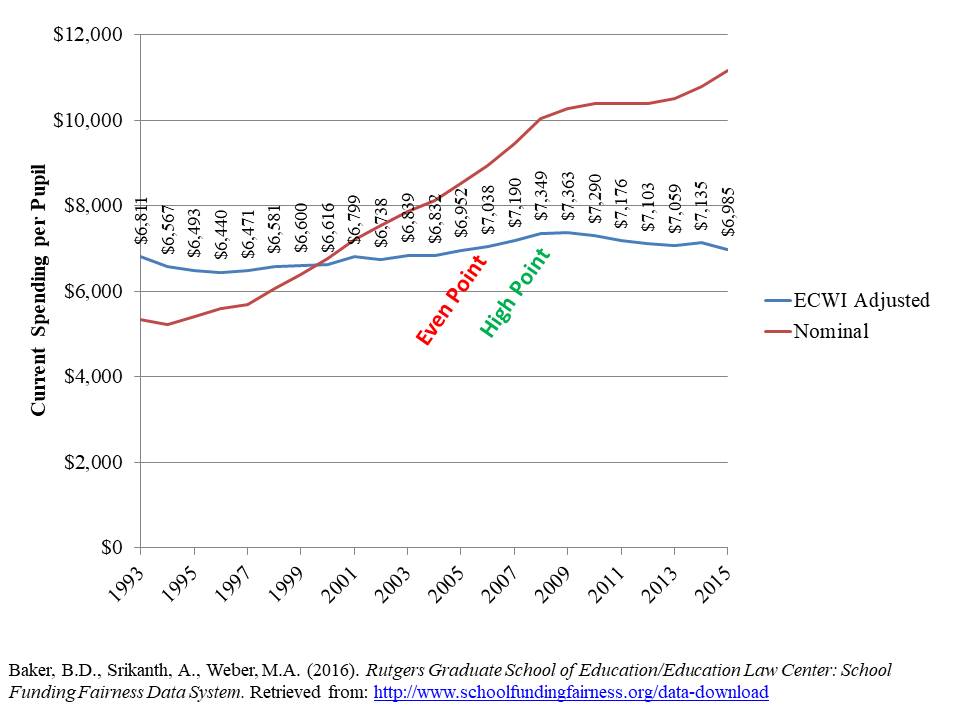

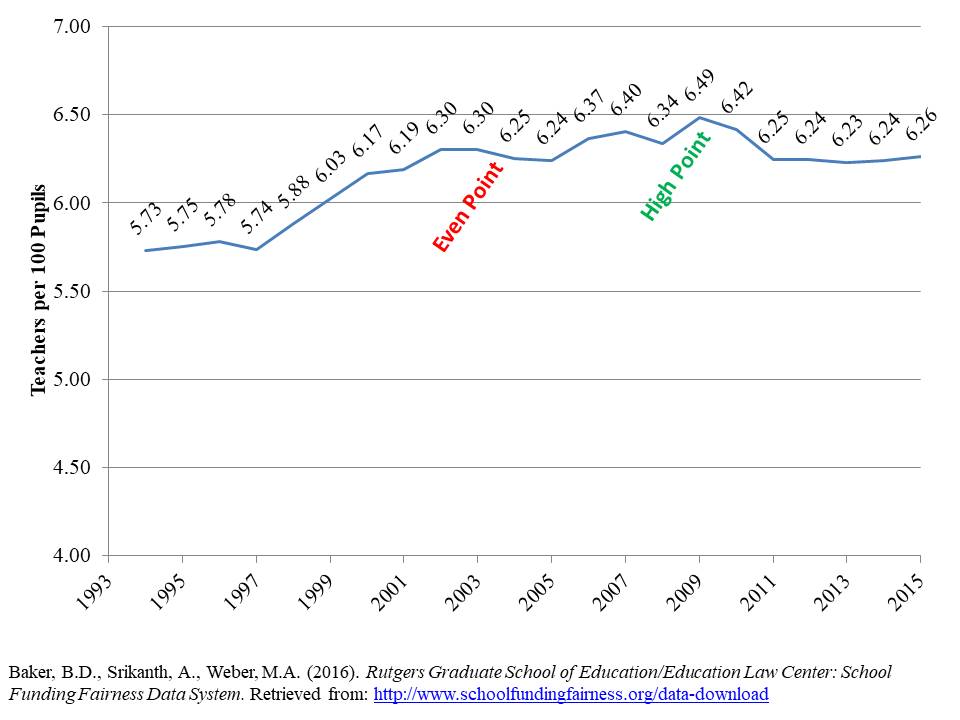

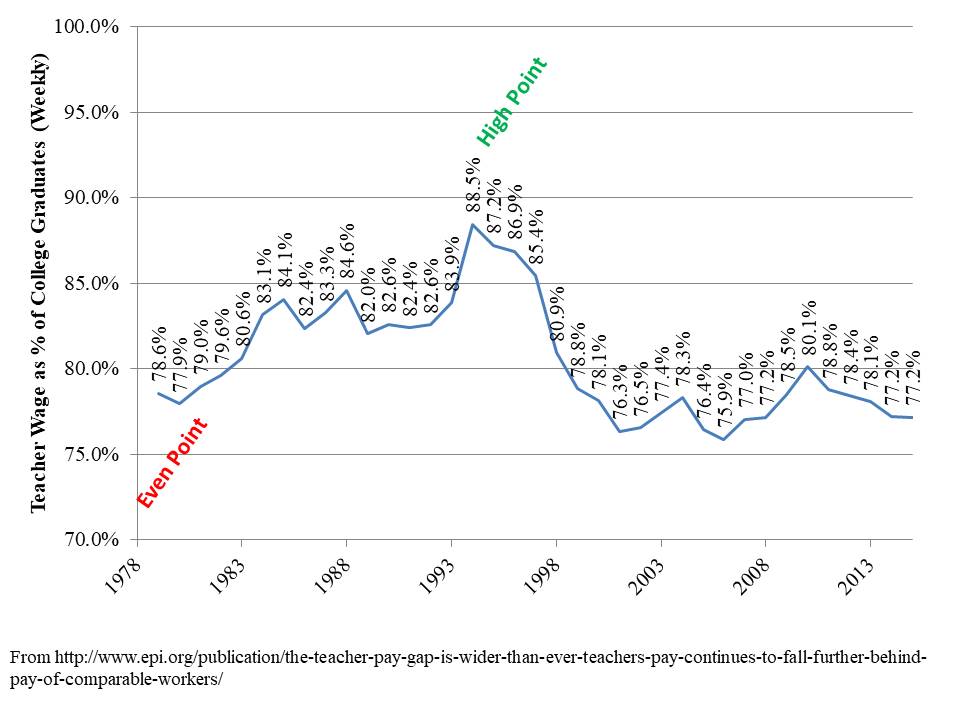

Whether at the state level on average, or at the local level, the amount of revenue available dictates directly the amount that can be spent. Current operating expenditures are balanced primarily between the quantity of staff employed, often reflected in pupil to teacher ratios or class sizes, and the compensation of those employees including salaries and benefits. Therein lies the primary tradeoff to be made in school district planning and budgeting. Yes, one could trade off teachers for computers (or “tech-based solutions”), but most schools don’t and those that do have not produced impressive results. At any given budget constraint, staffing quantities can be increased at the expense of wages, or wages increased at the expense of quantities. The combination of the two must be sufficient to achieve desired outcomes.

Figure 12
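The staffing-versus-wage tradeoff described above can be sketched with simple arithmetic. This is only an illustration: the enrollment, budget and compensation figures are hypothetical and are not drawn from any district discussed in this post.

```python
# A minimal sketch of the quantity-versus-compensation tradeoff under a fixed budget.
# All dollar figures and the enrollment count are hypothetical.

def affordable_staff(budget: float, compensation_per_teacher: float) -> float:
    """Number of teachers a fixed staffing budget supports at a given average compensation."""
    return budget / compensation_per_teacher


def pupils_per_teacher(enrollment: int, budget: float, compensation_per_teacher: float) -> float:
    """Pupil-to-teacher ratio implied by the budget and the compensation level."""
    return enrollment / affordable_staff(budget, compensation_per_teacher)


ENROLLMENT = 2_000             # hypothetical district enrollment
STAFFING_BUDGET = 12_000_000   # hypothetical budget for teacher compensation

# Same budget, two compensation levels (salary plus benefits per teacher).
lower_pay_ratio = pupils_per_teacher(ENROLLMENT, STAFFING_BUDGET, 80_000)
higher_pay_ratio = pupils_per_teacher(ENROLLMENT, STAFFING_BUDGET, 100_000)

print(f"Pupils per teacher at $80,000:  {lower_pay_ratio:.1f}")
print(f"Pupils per teacher at $100,000: {higher_pay_ratio:.1f}")
```

With the budget held constant, raising compensation necessarily raises the pupil-to-teacher ratio. The only way to improve both at once is to raise the budget.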

These connections matter from an equity perspective, from an adequacy perspective and from productivity and efficiency perspectives.

From an equity perspective, if revenue sources remain unequal, leading to unequal expenditures, those inequities will lead to unequal staffing ratios and/or wages, resulting in unequal school quality and eventually outcomes. The argument that schools and districts with less money simply need to use it better (that is, figure out how to hire better teachers in each position with lower total payroll) imposes an inequitable requirement. This argument is loosely based on the popular book Moneyball, which touted the success of the Oakland Athletics in applying new metrics to recruit undervalued players. But this argument forgets the book’s subtitle, “the art of winning an unfair game,” a reference to the difficulties small market teams face in competing successfully in a sport which only loosely regulates payroll parity. Clearly, baseball would be a fairer game if all teams could fund equal total payroll. While it’s up to Major League Baseball to decide whether greater parity across teams – a fairer game – is preferable (and profitable), public education shouldn’t be an unfair game.

From an adequacy perspective, sufficient funding is a necessary condition for hiring sufficient quantities of qualified personnel, to achieve sufficient outcomes. Finally, from a productivity and efficiency perspective, we do know that human resource intensive strategies tend to be productive, and, as of yet, we have little evidence of substantial efficiency gains from technological substitutions resulting in substantial reduction of human resources. Resource constrained schools and districts which already have large class sizes and non-competitive wages are unable to trade off one for the other. Put bluntly, if you don’t have it, you can’t spend it.

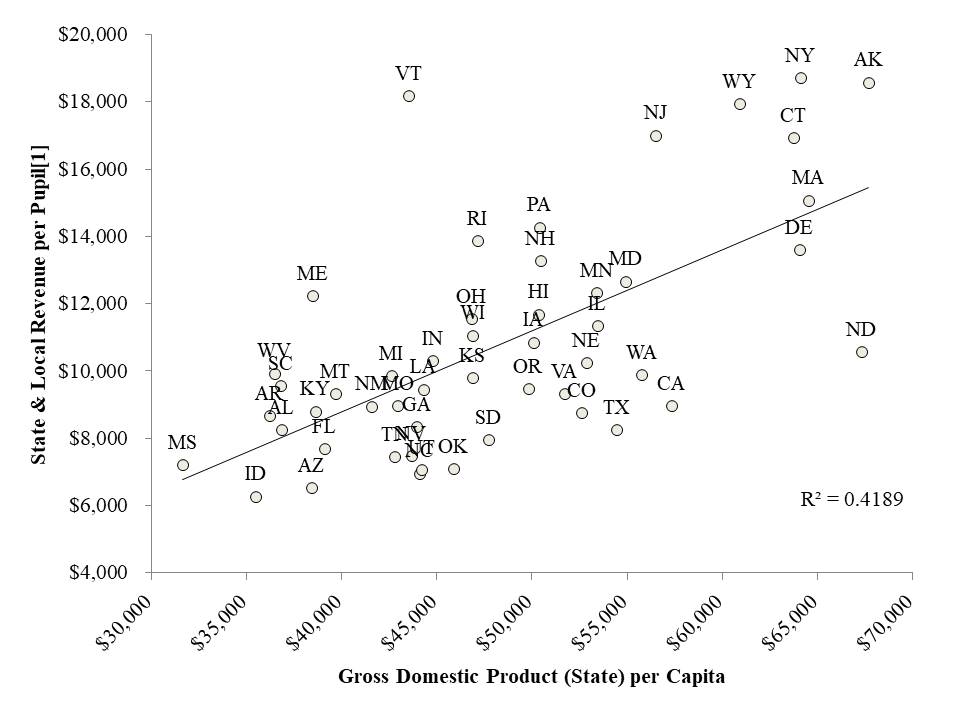

Variations in Revenues and Classroom Resources

Across states, average state and local revenues are partly a function of differences in “effort” and partly a function of differences in “capacity.” [i] Figure 13 shows the relationship across states in 2015 between our measure of “capacity” – gross domestic product (GDP state) – and total state and local revenues per pupil. Differences in state GDP explain about 42% of the differences in state and local revenue. Higher capacity states like Massachusetts, Connecticut, New York, Wyoming and Alaska tend to raise more revenue for schools. Vermont stands out as a state where capacity is below the median but revenue is very high. Mississippi stands out as a state with very low capacity and very low revenue.

Figure 13

[1] per pupil revenue projected for district with >2,000 pupils, average population density, average labor costs and 20% children in poverty[ii]

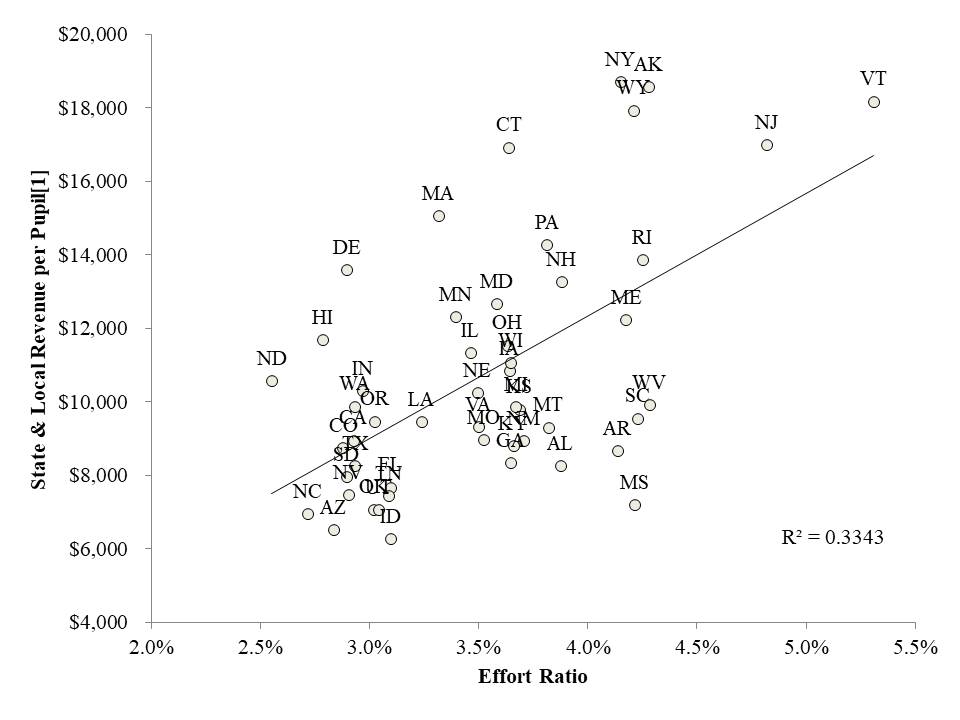

Figure 14 shows the relationship between a common measure of “effort” and state and local revenues. The effort measure is the ratio of state and local revenues to gross state product. That is, what percent of economic capacity are states spending on schools? Differences in effort explain about 1/3 of differences in state and local revenue per pupil. Despite having low capacity, Vermont has very high effort which results in high revenue levels. Other higher capacity states like Connecticut, Massachusetts and New York are able to raise relatively high revenue levels with much lower effort. Mississippi by contrast leverages above average effort, but because of its very low capacity, simply can’t dig itself out. Other states like Arizona and North Carolina, which have much greater capacity than Mississippi, simply choose not to apply effort toward raising school revenues.

Figure 14

[1] per pupil revenue projected for district with >2,000 pupils, average population density, average labor costs and 20% children in poverty
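The effort measure, and the way it combines with capacity to produce revenue per pupil, can be written out as a small sketch. The two states and all of their figures below are hypothetical, chosen only to show how a state with higher effort can still end up with lower revenue per pupil.

```python
# A minimal sketch of the effort ratio described above.
# "State A" and "State B" and all of their figures are hypothetical.

def effort(state_local_revenue: float, gross_state_product: float) -> float:
    """Share of economic capacity spent on schools: revenue divided by gross state product."""
    return state_local_revenue / gross_state_product


def revenue_per_pupil(effort_ratio: float, gross_state_product: float, enrollment: int) -> float:
    """Revenue per pupil implied by an effort ratio applied to a state's capacity."""
    return effort_ratio * gross_state_product / enrollment


# State A: low capacity, high effort.
effort_a = effort(5e9, 100e9)
revenue_a = revenue_per_pupil(effort_a, 100e9, 500_000)

# State B: high capacity, lower effort.
effort_b = effort(14e9, 400e9)
revenue_b = revenue_per_pupil(effort_b, 400e9, 1_000_000)

print(f"State A: effort {effort_a:.1%}, revenue per pupil ${revenue_a:,.0f}")
print(f"State B: effort {effort_b:.1%}, revenue per pupil ${revenue_b:,.0f}")
```

In this illustration State A puts forth more effort than State B but still raises less per pupil, because its capacity per pupil is half as large.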

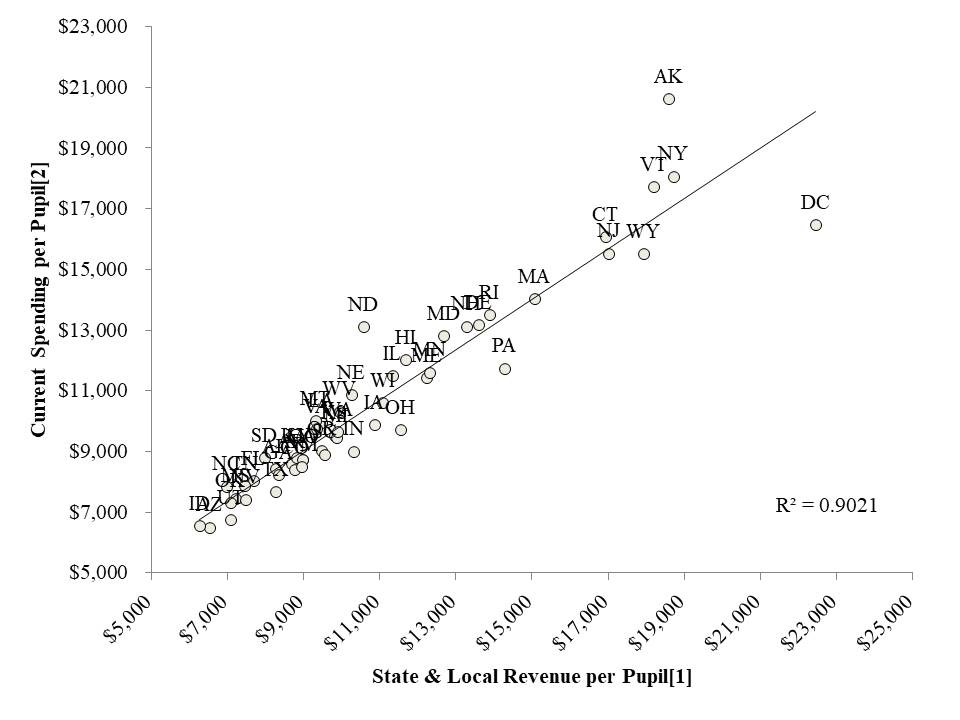

Figure 15 displays the rather obvious relationship that the more state and local revenue per pupil is raised in a state, the more is spent per pupil.

Figure 15

[1] per pupil revenue projected for district with >2,000 pupils, average population density, average labor costs and 20% children in poverty

[2] current spending per pupil projected for district with >2,000 pupils, average population density, average labor costs and 20% children in poverty

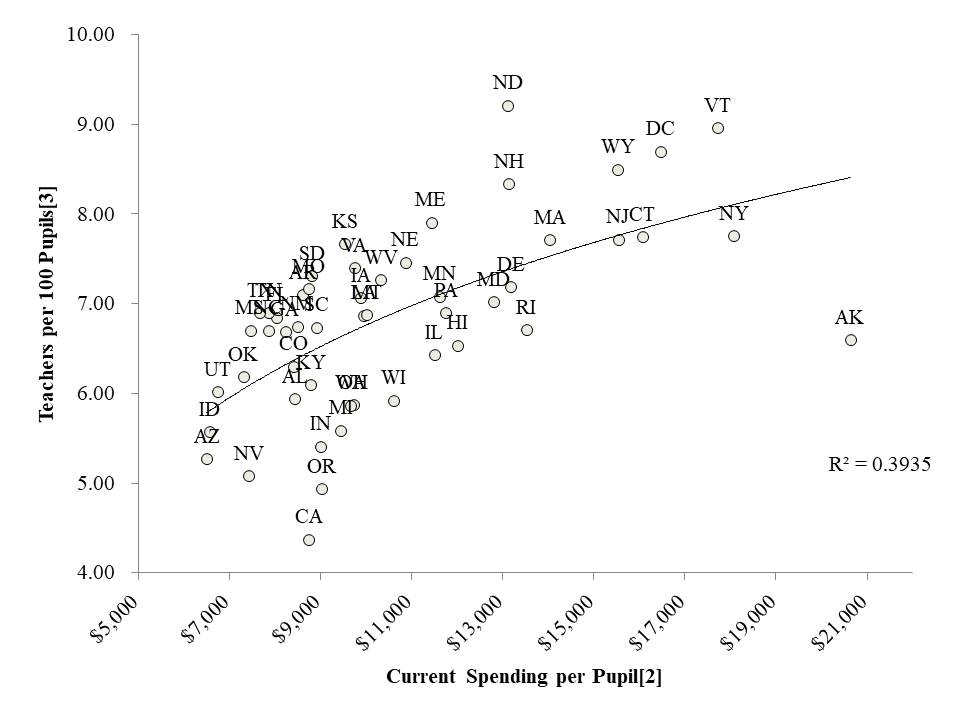

Figure 16 shows that greater per pupil spending generally leads to more teachers per 100 pupils. Very low spending states (also low effort) like Arizona and Nevada tend to have very low staffing ratios compared to other states.

Figure 16

[2] current spending per pupil projected for district with >2,000 pupils, average population density, average labor costs and 20% children in poverty

[3] teachers per 100 pupils projected for district with >2,000 pupils, average population density, average labor costs and 20% children in poverty

Figure 17 shows that states spending more per pupil, on average, tend to have more competitive teacher wages. Here, the indicator of competitive wages is the ratio of salaries of elementary and secondary teachers to same age, similarly educated non-teachers in the same labor market within each state. That is, the competitiveness of teacher wages is relative. As indicated by decades of teacher labor market research, the relative competitiveness of teacher wages influences the quality of candidates who enter teaching as a profession.[iii] Teacher compensation is especially poor in states like Arizona, which has among the lowest per pupil spending in the nation. By contrast, teacher compensation is highly competitive in Vermont and Wyoming, both higher spending states, but also states where non-teacher compensation is not particularly high, compared to New York, New Jersey and Connecticut.

Figure 17

District Spending Determines School Resources (Illinois Examples)

Similar disparities play out at the local level, especially in those states which have done the least to resolve disparities between rich and poor districts. Year after year, a report on which I am an author – Is School Funding Fair? – identifies Illinois and Pennsylvania as among the most disparate states in the nation. Specifically, in these two states, districts serving high poverty student populations continue to have far fewer resources per pupil than their more advantaged counterparts. Here, I use Illinois as an example of the resulting relationship between a) district level spending variation, b) school site spending variation, and c) on-the-ground resources including staffing assignments per pupil. These connections seem obvious. If a district has sufficient revenues, those revenues can be used to support the schools in that district, leading to more and better paid teachers. If a district doesn’t have the resources, neither will the schools in that district.

I raise this issue because, over the past decade, federal policy (and the think tanks which inform federal policy) has placed disproportionate emphasis on the need to resolve inequities in spending (particularly staffing expenditures) across schools within districts, while ignoring inequities in spending between districts. But, especially in highly inequitable states like Illinois, resolving between-school inequities in poorly funded school districts is akin to re-arranging deck chairs on the Costa Concordia.[iv]

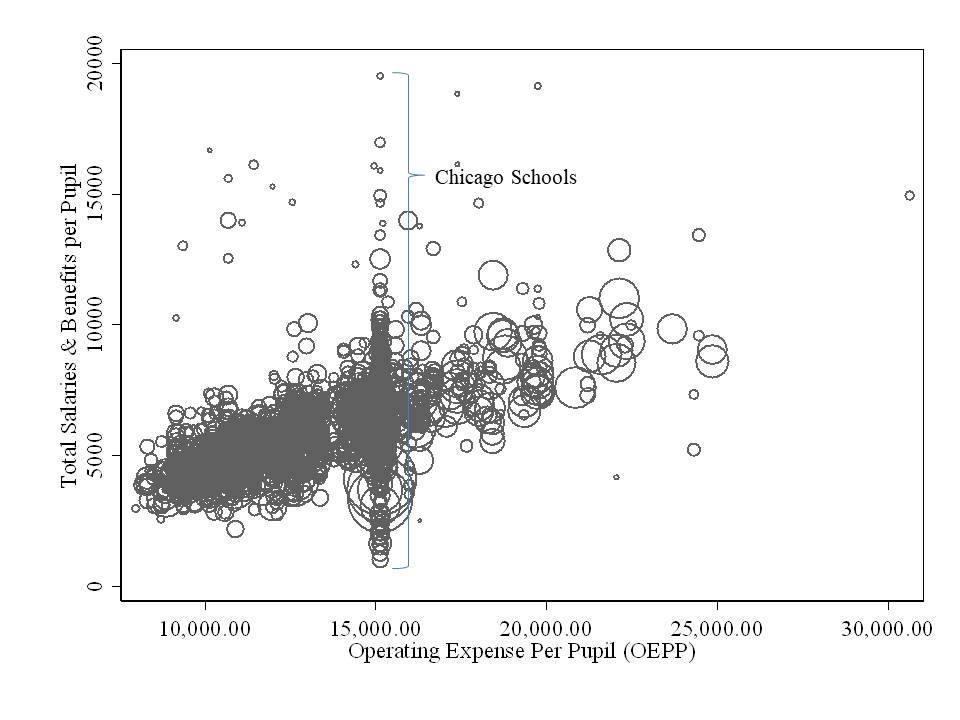

Figure 18 shows the relationship between district level operating expenditures per pupil (horizontal axis) and school site staffing expense per pupil on the vertical axis, for the Chicago metropolitan area in 2012. Only a few districts have large numbers of schools across which resources might vary. The City of Chicago schools appear at approximately the $15,000 per pupil point on the horizontal axis as a vertical pattern of circles. Circle size indicates school enrollment. While there appears to be substantial variation in resources across Chicago schools, much (about half) of that variation is a function of differences in student populations (special education in particular) and grade ranges served.

On average, Figure 18 shows that schools in districts that spend more per pupil overall, spend more per pupil at the school level on staffing. 44% of the variation in staffing expenditure across schools is driven by spending differences across districts. And about half of the remaining differences in staffing expenditure across schools are driven by the distribution of special education populations across schools and district targeting of staff to serve those children.[v] That is, much of the within district variation that does exist is rational. Resources that flow to districts pay for staff that work in schools. It really is that simple. If the district has more revenue coming in, it can spend more, and that spending shows up in the form of more and better paid teachers in schools.

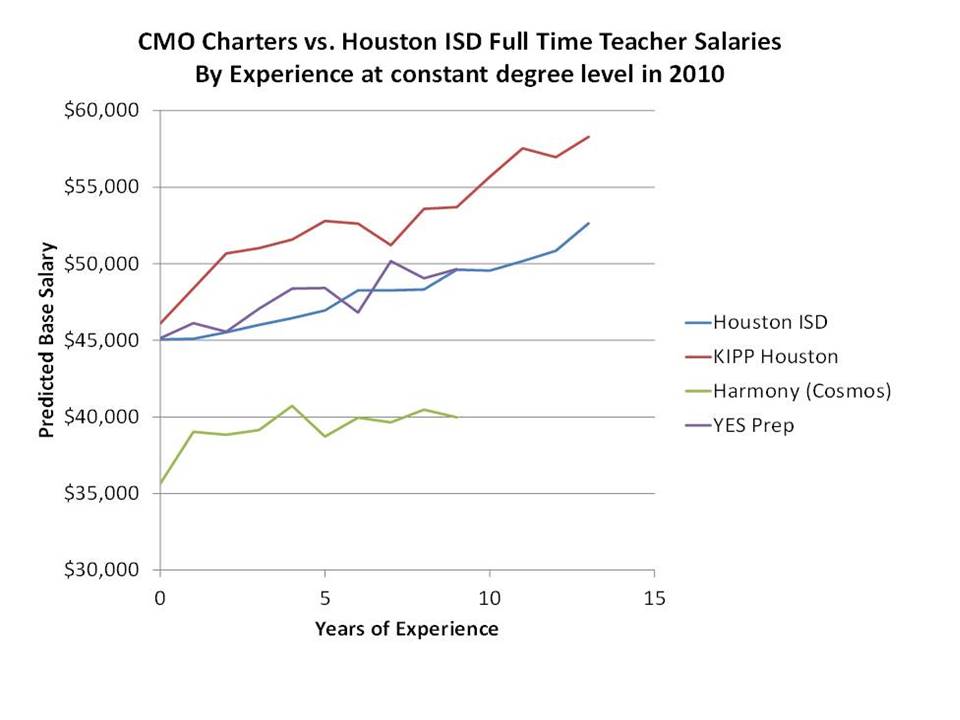

Figure 18
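To make concrete what it means for a share of school-level variation to be "driven by" differences across districts, here is a rough sketch of a between-group variance decomposition. This is not necessarily the method behind the 44% figure above. It is a simple, unweighted illustration, and the district names and staffing expenditures are hypothetical.

```python
# A minimal sketch: share of school-level variation that lies between districts.
# District names and per-pupil staffing expenditures are hypothetical.
from statistics import mean


def between_district_share(schools_by_district: dict[str, list[float]]) -> float:
    """Between-district sum of squares divided by total sum of squares across schools."""
    all_values = [v for values in schools_by_district.values() for v in values]
    grand_mean = mean(all_values)
    total_ss = sum((v - grand_mean) ** 2 for v in all_values)
    between_ss = sum(
        len(values) * (mean(values) - grand_mean) ** 2
        for values in schools_by_district.values()
    )
    return between_ss / total_ss


# School-site staffing expense per pupil, grouped by (hypothetical) district.
staffing_expense = {
    "District A": [5_000, 6_000],
    "District B": [8_000, 9_000],
}

share = between_district_share(staffing_expense)
print(f"Share of variation between districts: {share:.0%}")
```

The remainder is variation across schools within the same district, which is the portion that within-district equity policies can address.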

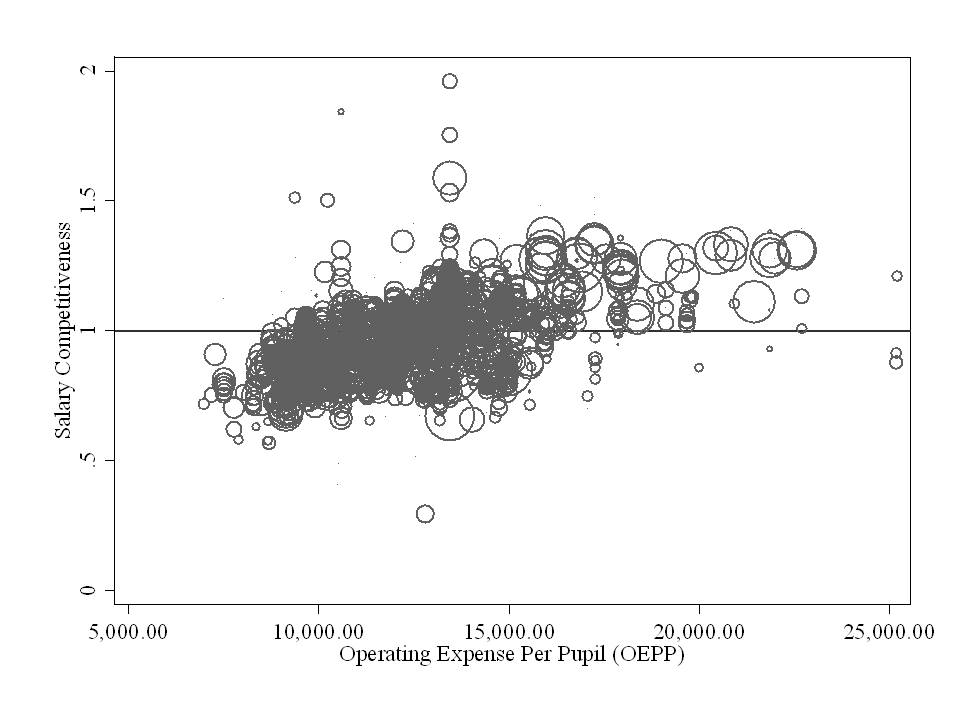

Figure 19 shows how, in the Chicago metropolitan area, differences in district level operating expenditures lead to differences in competitive wages for teachers. Here, competitiveness of wages is measured in terms of the salaries of teachers with specific numbers of years of experience and degree levels (contract hours and days per year, grade level taught, and job assignment), compared to their peers in other schools in the Chicago metro area. Salary competitiveness measured in this way reflects the recruitment and retention potential of districts, for those who have already chosen to teach. Salary differentials within teaching may influence teacher sorting across schools and districts.

Figure 19 shows that teachers in low spending districts tend to have salaries at about 75% of the average teacher with similar credentials in a similar assignment. Teachers in high spending districts tend to have salaries around 25% higher than the average teacher in a similar position with similar credentials.

Figure 19

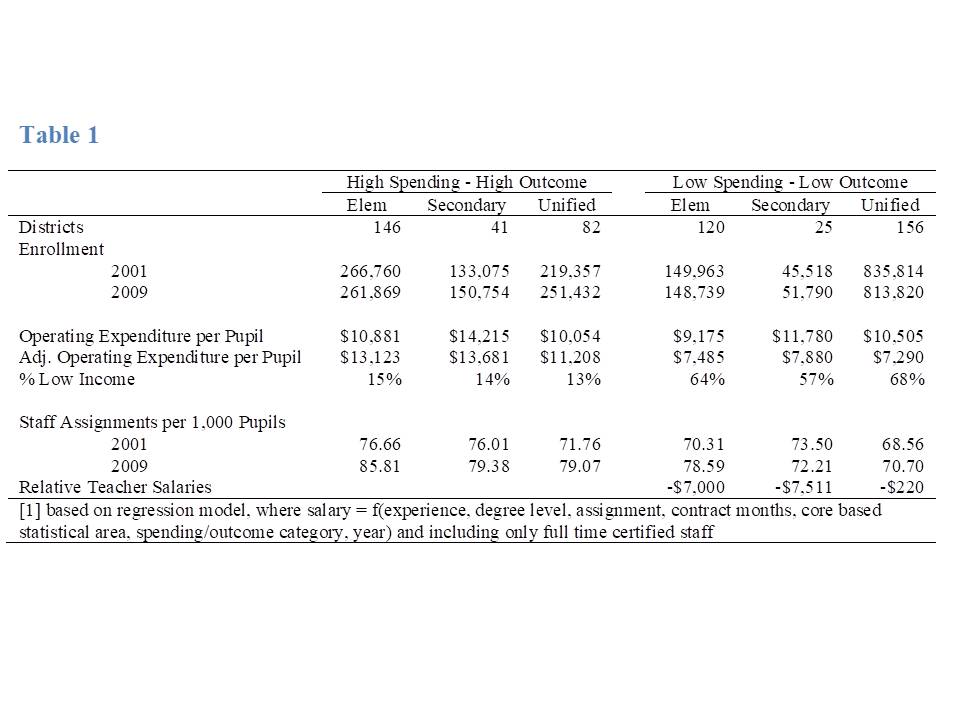

Finally, Table 1 summarizes features of Illinois school districts that I sorted into quadrants in a 2012 paper.[vi] The upper right (advantaged) quadrant contains districts with high spending and high outcomes, and the lower left (disadvantaged) quadrant contains those with low spending and low outcomes. To identify advantaged and disadvantaged school districts, spending was adjusted for the costs of providing equal educational opportunity to all students by methods discussed in a forthcoming post. Nominal and adjusted spending levels are reported in Table 1.

In my analysis, there were 146 high spending high outcome elementary districts serving over 250 thousand children and 120 low spending low outcome districts serving about 150 thousand children. There were 41 high spending high outcome secondary districts serving up to 150 thousand children and 25 low spending low outcome secondary districts serving about 50 thousand children. For unified districts, there were 82 that were high spending and high outcome, serving 250 thousand children, and 156 that were low spending with low outcomes, serving over 800 thousand children, with about half of those children attending Chicago Public Schools.

Even without any adjustment for costs or needs, the average per pupil operating expenditures are lower in low spending, low outcome districts. The percent of children who are low income is substantially higher in low spending, low outcome districts. Table 1 shows that the district operating expenditure advantages of the high resource – high outcome district schools translate directly to a) more staff per pupil and b) better paid staff. In 2009, high resource schools had 86 and 79 teacher assignments per 1000 pupils in elementary and secondary schools respectively, compared to 79 and 72 for low resource schools. Further, for teachers with similar training and experience in similar assignments, teachers in low resource schools were paid $7,000 to $7,500 less in salary. That is, schools in financially advantaged districts both have more staff per pupil and are able to pay them more, while serving a far less needy student population.
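The staffing figures above are easier to compare when converted to pupils per teacher assignment. The short sketch below does that arithmetic using only the four numbers reported in the preceding paragraph. The function names are my own, and pupils per assignment is not the same thing as class size.

```python
# A minimal sketch converting teacher assignments per 1000 pupils
# into pupils per teacher assignment, using the 2009 figures cited above.

def pupils_per_assignment(assignments_per_1000: float) -> float:
    """Pupils per teacher assignment implied by assignments per 1000 pupils."""
    return 1000 / assignments_per_1000


def percent_more(high: float, low: float) -> float:
    """Percent by which the high resource figure exceeds the low resource figure."""
    return 100 * (high - low) / low


# (high resource, low resource) teacher assignments per 1000 pupils
staffing = {"elementary": (86, 79), "secondary": (79, 72)}

for level, (high, low) in staffing.items():
    print(
        f"{level}: {pupils_per_assignment(high):.1f} vs "
        f"{pupils_per_assignment(low):.1f} pupils per assignment; "
        f"high resource schools have {percent_more(high, low):.1f}% more assignments"
    )
```

By this arithmetic, high resource schools have roughly 9 to 10 percent more teacher assignments per pupil than low resource schools at both levels.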

The take-home point of this post is that on average, the financial resources available to schools generally translate to human resources. Financial resources translate to the quantities of staff which can be hired or retained and they translate to the wages that can be paid. And both of these matter, as will be discussed more extensively in future posts. To summarize:

- Schooling is human resource intensive, whether traditional public, charter or private;

- When schools have more money, they invest it in more staff and better paid staff and when they don’t, they can’t!

More later on whether charter schools and private schools have much to offer in the way of technological innovation, substitution and creative resource allocation.

NOTES

[i] Baker, B. D. (2016). School Finance & the Distribution of Equal Educational Opportunity in the Postrecession US. Journal of Social Issues, 72(4), 629-655.

[ii] Baker, B.D., Srikanth, A., Weber, M.A. (2016). Rutgers Graduate School of Education/Education Law Center: School Funding Fairness Data System. Retrieved from: http://www.schoolfundingfairness.org/data-download

[iii] Baker, B. D. (2016). Does money matter in education?. Albert Shanker Institute.

[iv] Baker, B. D. (2012). Rearranging deck chairs in Dallas: Contextual constraints and within-district resource allocation in urban Texas school districts. Journal of Education Finance, 37(3), 287-315.

[v] Baker, B.D., Srikanth, A., Weber, M. (2017). The Incompatibility of Federal Policy Preferences for Charter School Expansion and Within District Equity.

[vi] Baker, B. D. (2012, March). Unpacking the consequences of disparities in school district financial inputs: Evidence from staffing data in New York and Illinois. In annual meeting of the Association for Education Finance and Policy, Boston, MA.