This post is taken from a forthcoming report in which I summarize literature related to state school finance reforms and explore relationships between changing distributions of funding and distributions of tangible classroom-level resources. The newly released NBER paper by Jackson, Johnson and Persico speaks to similar issues and is included in the discussion that follows:

==========

In a comprehensive 2012 review of the literature addressing the question “Does Money Matter in Education?”, Baker concluded:

To be blunt, money does matter. Schools and districts with more money clearly have greater ability to provide higher-quality, broader, and deeper educational opportunities to the children they serve. Furthermore, in the absence of money, or in the aftermath of deep cuts to existing funding, schools are unable to do many of the things they need to do in order to maintain quality educational opportunities. Without funding, efficiency tradeoffs and innovations being broadly endorsed are suspect. One cannot tradeoff spending money on class size reductions against increasing teacher salaries to improve teacher quality if funding is not there for either – if class sizes are already large and teacher salaries non-competitive. While these are not the conditions faced by all districts, they are faced by many.

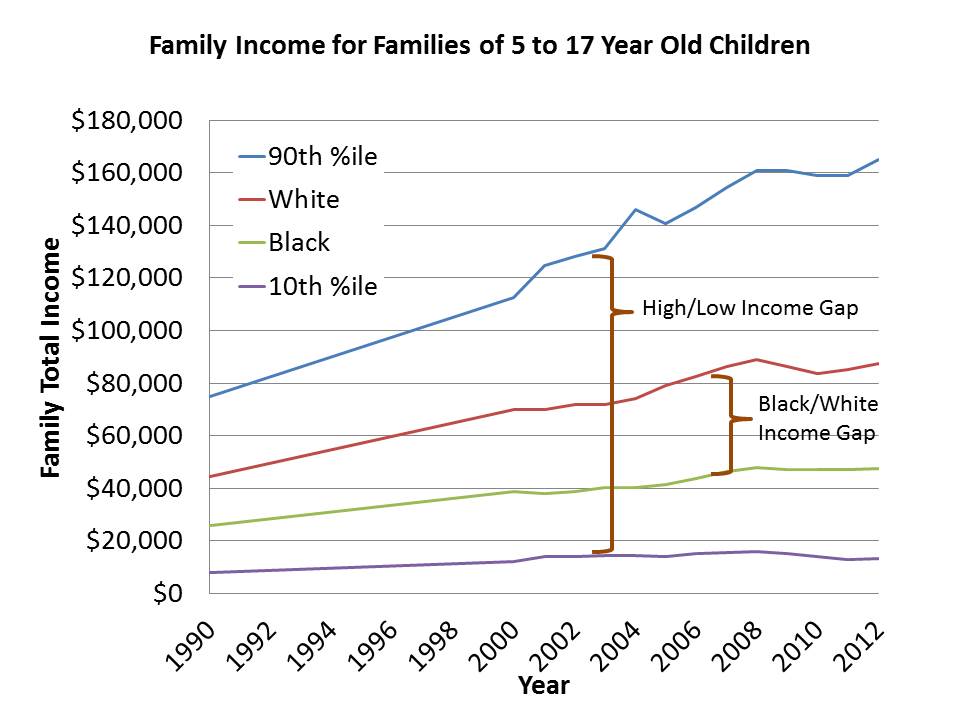

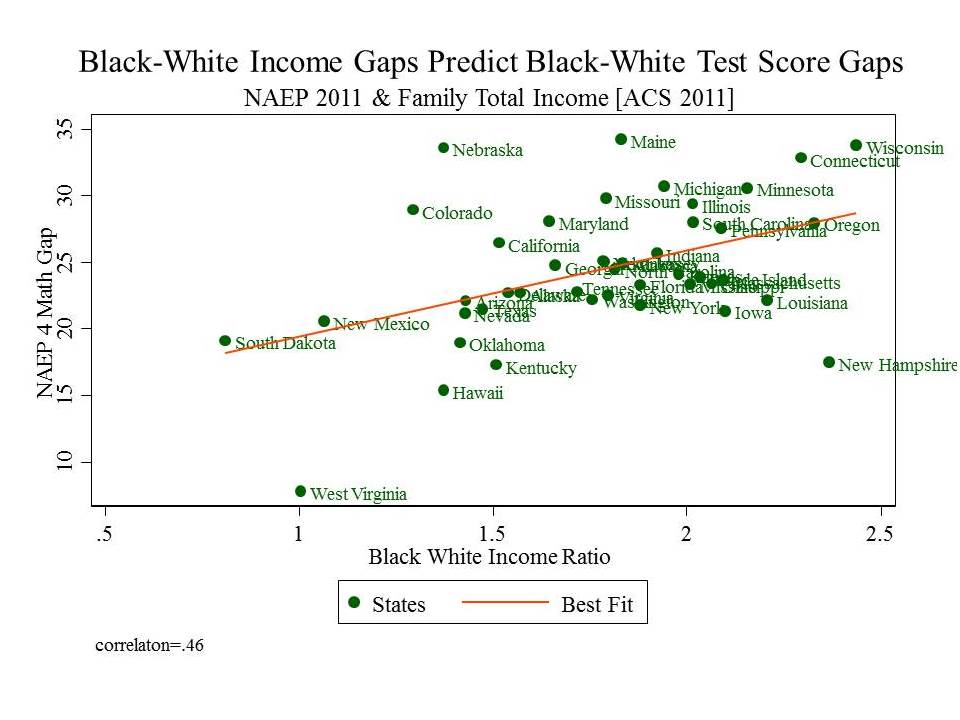

Building on the findings and justifications provided by Baker (2012), we offer Figure 4 as a simple model of the relationship between schooling resources and children’s measurable school achievement outcomes. First, the fiscal capacity of states (their wealth and income) does affect their ability to finance public education systems. But, as we have shown in related research that we expand on here, the effort states put forth through state and local tax policy plays an equally important role.

Figure 4

The amount of state and local revenue raised drives most of the current spending of local public school districts, because federal aid makes up a relatively small share. Further, the amount of money a district is able to spend on current operations determines the staffing ratios and class sizes it can provide and the wages it can pay. Within any given budget, there are tradeoffs to be made between staffing ratios and wage levels. Finally, as noted above, a sizable body of research illustrates the connection between staffing qualities and quantities and student outcomes.
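The tradeoff between staffing ratios and wages can be written as a simple accounting identity. As a sketch, assume that $S$ is a district's spending per pupil on teachers, $w$ is average compensation (salary plus benefits) per teacher, $N$ is enrollment and $T$ is the number of teachers. These symbols are introduced here for illustration only, and the identity covers teacher spending alone, not other staff or non-personnel costs:

$$
S = \frac{wT}{N} = \frac{w}{r}, \qquad \text{where } r = \frac{N}{T} \text{ is the pupil-to-teacher ratio.}
$$

Rearranged as $r = w/S$, the identity shows that with $S$ held fixed, any increase in $w$ requires a proportional increase in $r$: raising compensation by 10 percent without new money means raising the pupil-to-teacher ratio by 10 percent. Only an increase in $S$ allows a district to lower class sizes and raise wages at the same time.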

The connections laid out in this model seem rather obvious. How much you raise dictates how much you can spend. How much you spend, in a labor-intensive industry, dictates how many individuals you can employ, the wage you can pay them, and in turn the quality of individuals you can recruit and retain. But in this modern era of resource-free school “reforms,” the connections between revenue, spending and real, tangible resources are often ignored or, worse, argued to be irrelevant. A common theme advanced in modern political discourse is that all schools and districts already have more than enough money to get the job done; they simply need to use it more wisely and adjust to the “new normal.”[i]

But on closer inspection of funding levels across states, and across local public school districts within states, this argument rings hollow. To show why, we devote a significant portion of this report to statistically documenting these connections. First, we briefly review the existing literature on the relevance of state school finance systems, and of reforms to those systems, for improving the level and distribution of student outcomes, along with literature on the importance of class sizes and teacher wages for improving school quality as measured by student outcomes.

Equitable and Adequate Funding

A growing body of evidence indicates that substantive and sustained state school finance reforms matter for improving both the level and distribution of short-term and long-run student outcomes. A few studies have examined school finance reforms broadly, applying multi-state analyses over time. Card and Payne (2002) found “evidence that equalization of spending levels leads to a narrowing of test score outcomes across family background groups.”[ii] (p. 49) Most recently, Jackson, Johnson & Persico (2015) evaluated long-term outcomes of children exposed to court-ordered school finance reforms, finding that “a 10 percent increase in per-pupil spending each year for all twelve years of public school leads to 0.27 more completed years of education, 7.25 percent higher wages, and a 3.67 percentage-point reduction in the annual incidence of adult poverty; effects are much more pronounced for children from low-income families.” (p. 1)[iii]

Numerous other researchers have explored the effects of specific state school finance reforms over time.[iv] Several such studies provide compelling evidence of the potential positive effects of school finance reforms. Studies of Michigan school finance reforms in the 1990s have shown positive effects on student performance in both the previously lowest-spending districts[v] and previously lower-performing districts.[vi] Similarly, a study of Kansas school finance reforms in the 1990s, which also involved primarily a leveling up of low-spending districts, found that a 20 percent increase in spending was associated with a 5 percent increase in the likelihood of students going on to postsecondary education.[vii]

Three studies of Massachusetts school finance reforms from the 1990s find similar results. The first, by Thomas Downes and colleagues, found that the combination of funding and accountability reforms “has been successful in raising the achievement of students in the previously low-spending districts.” (p. 5)[viii] The second found that “increases in per-pupil spending led to significant increases in math, reading, science, and social studies test scores for 4th- and 8th-grade students.”[ix] The most recent of the three, published in 2014 in the Journal of Education Finance, found that “changes in the state education aid following the education reform resulted in significantly higher student performance.” (p. 297)[x] Similar findings have been reported in other states, including Vermont.[xi]

On balance, it is safe to say that a sizable and growing body of rigorous empirical literature shows that state school finance reforms can have substantive, positive effects on student outcomes, including reductions in outcome disparities, increases in overall outcome levels, or both.[xii]

Class Sizes and Teacher Salaries

The premise that money matters for improving school quality is grounded in the assumption that having more money gives schools and districts the opportunity to improve the qualities and quantities of real resources. Jackson, Johnson and Persico (2015) explain that the spending increases they link to long-term benefits “were associated with sizable improvements in measured school quality, including reductions in student-to-teacher ratios, increases in teacher salaries, and longer school years.” (p. 1)

The primary resources involved in the production of schooling outcomes are human resources, that is, the quantities and qualities of teachers, administrators, support staff and other staff in schools. Quantities of school staff are reflected in pupil-to-teacher ratios and average class sizes. Reducing class sizes or overall pupil-to-staff ratios requires additional staff, and therefore additional money, assuming wages and benefits per staff member remain constant. Qualities of school staff depend in part on the compensation available to recruit and retain them (specifically salaries and benefits), in addition to working conditions. Notably, working conditions may be reflected in part through measures of workload, like average class sizes, as well as the composition of the student population.
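To make concrete the point that smaller classes require more staff, and therefore more money, when compensation per staff member is held constant, here is a minimal Python sketch. The function name `class_size_reduction_cost` and all numbers (1,000 pupils, a reduction from 25 to 20 pupils per teacher, $80,000 in salary and benefits per teacher) are hypothetical and are not drawn from the report; the sketch also ignores facilities constraints such as classroom availability.

```python
def class_size_reduction_cost(enrollment, current_ratio, target_ratio,
                              compensation_per_teacher):
    """Return (additional teachers, additional annual cost) of moving from
    current_ratio to target_ratio pupils per teacher, holding compensation
    per teacher constant. Hypothetical helper for illustration only."""
    if current_ratio <= 0 or target_ratio <= 0:
        raise ValueError("pupil-to-teacher ratios must be positive")
    current_teachers = enrollment / current_ratio  # teachers needed now
    target_teachers = enrollment / target_ratio    # teachers needed at the new ratio
    extra_teachers = target_teachers - current_teachers
    return extra_teachers, extra_teachers * compensation_per_teacher


# Hypothetical district: 1,000 pupils, ratio cut from 25 to 20,
# $80,000 in salary and benefits per teacher.
extra, cost = class_size_reduction_cost(1000, 25, 20, 80_000)
print(f"{extra:.0f} more teachers, ${cost:,.0f} more per year")
# prints "10 more teachers, $800,000 more per year"
```

Dividing the added cost by enrollment gives $800 more per pupil per year in this hypothetical case, a 25 percent increase in teacher spending per pupil (from $3,200 to $4,000) that matches the 25 percent increase in the number of teachers.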

A substantial body of literature has accumulated to support the conclusion that both teachers’ overall wages and their relative wages affect the quality of those who choose to enter the teaching profession, and whether they stay once they get in. For example, Murnane and Olsen (1989) found that salaries affect the decision to enter teaching and the duration of the teaching career,[xiii] while Figlio (1997, 2002) and Ferguson (1991) concluded that higher salaries are associated with more qualified teachers.[xiv] In addition, more recent studies have tackled the specific issue of relative pay noted above. Loeb and Page (2000) showed that:

“Once we adjust for labor market factors, we estimate that raising teacher wages by 10 percent reduces high school dropout rates by 3 percent to 4 percent. Our findings suggest that previous studies have failed to produce robust estimates because they lack adequate controls for non-wage aspects of teaching and market differences in alternative occupational opportunities.”[xv]

In short, while salaries are not the only factor involved, they do affect the quality of the teaching workforce, which in turn affects student outcomes.

Research on the flip side of this issue – evaluating spending constraints or reductions – reveals the potential harm to teaching quality that flows from leveling down or reducing spending. For example, David Figlio and Kim Rueben (2001) note that, “Using data from the National Center for Education Statistics we find that tax limits systematically reduce the average quality of education majors, as well as new public school teachers in states that have passed these limits.”[xvi]

Salaries also play a potentially important role in improving the equity of student outcomes. While several studies show that higher salaries relative to labor market norms can draw higher-quality candidates into teaching, the evidence also indicates that relative teacher salaries across schools and districts may influence the distribution of teaching quality. For example, Ondrich, Pas and Yinger (2008) “find that teachers in districts with higher salaries relative to non-teaching salaries in the same county are less likely to leave teaching and that a teacher is less likely to change districts when he or she teaches in a district near the top of the teacher salary distribution in that county.”[xvii]

In addition, ample research indicates that children in smaller classes achieve better outcomes, both academic and otherwise, and that class size reduction can be an effective strategy for closing racial or socio-economic achievement gaps.[xviii] While it is certainly plausible that other uses of the same money might be equally or even more effective, there is little evidence to support this. For example, while we are quite confident that higher teacher salaries can lead to increases in the quality of applicants to the teaching profession and to improved student outcomes, we do not know whether the same money would achieve better or worse outcomes if it were spent on class size reduction instead. Indeed, some have raised concerns that large-scale class size reductions can lead to unintended labor market consequences that offset some of the gains attributable to smaller classes (such as the inability to recruit enough fully qualified teachers).[xix] And many, over time, have argued for more precise cost-benefit analysis.[xx] Still, the preponderance of existing evidence suggests that the additional resources expended on class size reductions do result in positive effects.

Both reductions to class sizes and improvements to competitive wages can yield improved outcomes, but the efficiency gains of choosing one strategy over the other are unclear, and local public school districts rarely have complete flexibility to make tradeoffs.[xxi] Class size reduction may be constrained by available classrooms. Smaller class sizes and reduced total student loads are also a relevant working condition, simultaneously influencing teacher recruitment and retention.[xxii] That is, providing smaller classes may partly offset the need for higher wages to recruit or retain teachers. High-poverty schools require a both/and rather than an either/or strategy when it comes to smaller classes and competitive wages.

[i] Baker, B., & Welner, K. G. (2012). Evidence and rigor scrutinizing the rhetorical embrace of evidence-based decision making. Educational Researcher, 41(3), 98-101.

[ii] Card, D., and Payne, A. A. (2002). School Finance Reform, the Distribution of School Spending, and the Distribution of Student Test Scores. Journal of Public Economics, 83(1), 49-82.

[iii] Jackson, C. K., Johnson, R., & Persico, C. (2014). The Effect of School Finance Reforms on the Distribution of Spending, Academic Achievement, and Adult Outcomes (No. w20118). National Bureau of Economic Research.

Jackson, C. K., Johnson, R., & Persico, C. (2015). The Effects of School Spending on Educational and Economic Outcomes: Evidence from School Finance Reforms (No. w20847). National Bureau of Economic Research.

[iv] Figlio (2004) argues that the influence of state school finance reforms on student outcomes is perhaps better measured within states over time, because national studies of the type attempted by Card and Payne confront two problems: a) the enormous diversity in the nature of state aid reform plans, and b) the paucity of national-level student performance data.

Figlio, D. N. (2004). Funding and Accountability: Some Conceptual and Technical Issues in State Aid Reform. In Yinger, J. (Ed.), Helping Children Left Behind: State Aid and the Pursuit of Educational Equity (pp. 87-111). Cambridge, MA: MIT Press.

[v] Roy, J. (2011). Impact of school finance reform on resource equalization and academic performance: Evidence from Michigan. Education Finance and Policy, 6(2), 137-167.

Roy (2011) analyzed the effects of Michigan’s 1990s school finance reforms, which led to a significant leveling up for previously low-spending districts. Roy, whose analyses measure both whether the policy changed funding and who was affected, found that “Proposal A was quite successful in reducing interdistrict spending disparities. There was also a significant positive effect on student performance in the lowest-spending districts as measured in state tests.” (p. 137)

[vi] Papke, L. (2005). The effects of spending on test pass rates: evidence from Michigan. Journal of Public Economics, 89(5-6), 821-839.

Hyman, J. (2013). Does Money Matter in the Long Run? Effects of School Spending on Educational Attainment. http://www-personal.umich.edu/~jmhyman/Hyman_JMP.pdf.

Papke (2005), also evaluating Michigan school finance reforms from the 1990s, found that “increases in spending have nontrivial, statistically significant effects on math test pass rates, and the effects are largest for schools with initially poor performance.” (p. 821)

Most recently, Hyman (2013) also found positive effects of Michigan school finance reforms in the 1990s, but raised some concerns regarding the distribution of those effects. Hyman found that much of the increase was targeted to schools serving fewer low-income children. Nevertheless, the study found that students exposed to an additional “12%, more spending per year during grades four through seven experienced a 3.9 percentage point increase in the probability of enrolling in college, and a 2.5 percentage point increase in the probability of earning a degree.” (p. 1)

[vii] Deke, J. (2003). A study of the impact of public school spending on postsecondary educational attainment using statewide school district refinancing in Kansas. Economics of Education Review, 22(3), 275-284. (p. 275)

[viii] Downes, T. A., Zabel, J., and Ansel, D. (2009). Incomplete Grade: Massachusetts Education Reform at 15. Boston, MA: MassINC.

[ix] Guryan, J. (2001). Does Money Matter? Estimates from Education Finance Reform in Massachusetts. Working Paper No. 8269. Cambridge, MA: National Bureau of Economic Research.

“The magnitudes imply a $1,000 increase in per-pupil spending leads to about a third to a half of a standard-deviation increase in average test scores. It is noted that the state aid driving the estimates is targeted to under-funded school districts, which may have atypical returns to additional expenditures.” (p. 1)

[x] Nguyen-Hoang, P., & Yinger, J. (2014). Education Finance Reform, Local Behavior, and Student Performance in Massachusetts. Journal of Education Finance, 39(4), 297-322.

[xi] Downes had conducted earlier studies of Vermont school finance reforms in the late 1990s (Act 60). In a 2004 book chapter, Downes noted “All of the evidence cited in this paper supports the conclusion that Act 60 has dramatically reduced dispersion in education spending and has done this by weakening the link between spending and property wealth. Further, the regressions presented in this paper offer some evidence that student performance has become more equal in the post-Act 60 period. And no results support the conclusion that Act 60 has contributed to increased dispersion in performance.” (p. 312)

Downes, T. A. (2004). School Finance Reform and School Quality: Lessons from Vermont. In Yinger, J. (Ed.), Helping Children Left Behind: State Aid and the Pursuit of Educational Equity. Cambridge, MA: MIT Press.

[xii] Indeed, this point is not without some controversy, much of which is readily dismissed. Secondhand references to dreadful failures following massive infusions of new funding can often be traced to methodologically inept, anecdotal tales of desegregation litigation in Kansas City, Missouri, or of court-ordered financing of urban districts in New Jersey.

Baker, B. D., & Welner, K. G. (2011). School finance and courts: Does reform matter, and how can we tell. Teachers College Record, 113(11), 2374-2414.

Two reports from the Cato Institute are illustrative (Ciotti, 1998; Coate & VanDerHoff, 1999).

Ciotti, P. (1998). Money and School Performance: Lessons from the Kansas City Desegregation Experience. Cato Policy Analysis No. 298.

Coate, D. & VanDerHoff, J. (1999). Public School Spending and Student Achievement: The Case of New Jersey. Cato Journal, 19(1), 85-99.

Hanushek and Lindseth (2009) provide a similar anecdote-driven approach, dedicating a chapter of a book to arguing that court-ordered school funding reforms in New Jersey, Wyoming, Kentucky, and Massachusetts resulted in few or no measurable improvements. However, these conclusions are based on little more than a series of graphs of student achievement on the National Assessment of Educational Progress (NAEP) in 1992 and 2007 and an untested assertion that, during that period, each of the four states infused substantial additional funds into public education in response to judicial orders. That is, the authors merely assert that these states experienced large infusions of funding, focused on low-income and minority students, within the time period identified. They necessarily assume that similar changes did not occur in all the other states that serve as a comparison basis. Yet they validate neither assertion. Baker and Welner (2011) explain that Hanushek and Lindseth failed even to measure whether substantive changes had occurred in the level or distribution of school funding, or when and for how long. In New Jersey, for example, the infusion of funding occurred from 1998 to 2003 (or 2005), so Hanushek and Lindseth’s window includes six years at the front end during which little change occurred (When?). Kentucky’s reforms had largely faded by the mid-to-late 1990s, yet Hanushek and Lindseth measure post-reform effects in 2007 (When?). Further, in New Jersey, funding was infused into approximately 30 specific districts, but Hanushek and Lindseth explore overall changes in outcomes among low-income and minority children using NAEP data, and while some of these children attended the districts receiving additional support, many did not (Who?). In short, the slipshod comparisons made by Hanushek and Lindseth provide no reasonable basis for asserting either the success or the failure of state school finance reforms.

Hanushek (2006) goes so far as to title the book he edited “Courting Failure: How School Finance Lawsuits Exploit Judges’ Good Intentions and Harm Our Children.” The premise that additional funding for schools, often leveraged toward class size reduction, additional course offerings, or increased teacher salaries, causes harm to children is, on its face, absurd. And the book, which implies as much in its title, never once validates that such reforms ever do cause harm. Rather, the title is little more than a manipulative attempt to convince the uncritical spectator who never gets past the book’s cover to fear that school finance reforms might somehow harm children. The book also includes two examples of a type of analysis that appeared with some frequency in the mid-2000s and likewise aimed to show that school funding doesn’t matter. These studies cherry-pick anecdotal information on either or both a) poorly funded schools that have high outcomes or b) well-funded schools that have low outcomes (see Evers & Clopton, 2006; Walberg, 2006).

In an equally problematic analysis, Neymotin (2010) set out to show that massive court-ordered infusions of funding in Kansas following Montoy v. Kansas led to no substantive improvements in student outcomes. However, Neymotin evaluated changes in school funding from 1997 to 2006, while the first additional funding infused after the January 2005 Kansas Supreme Court decision arrived in the 2005-06 school year, the end point of Neymotin’s outcome data. The analysis therefore leaves essentially no post-reform period in which effects could appear.


Hanushek, E. A., and Lindseth, A. (2009). Schoolhouses, Courthouses and Statehouses. Princeton, NJ: Princeton University Press. See also: http://edpro.stanford.edu/Hanushek/admin/pages/files/uploads/06_EduO_Hanushek_g.pdf

Hanushek, E. A. (ed.). (2006). Courting failure: How school finance lawsuits exploit judges’ good intentions and harm our children (No. 551). Hoover Press.

Evers, W. M., and Clopton, P. (2006). “High-Spending, Low-Performing School Districts,” in Courting Failure: How School Finance Lawsuits Exploit Judges’ Good Intentions and Harm our Children (Eric A. Hanushek, ed.) (pp. 103-194). Palo Alto, CA: Hoover Press.

Walberg, H. (2006). High Poverty, High Performance Schools, Districts and States. In Courting Failure: How School Finance Lawsuits Exploit Judges’ Good Intentions and Harm our Children (Eric A. Hanushek, ed.) (pp. 79-102). Palo Alto, CA: Hoover Press.


Greene and Trivitt (2008) present a study in which they claim to show that court-ordered school finance reforms led to no substantive improvements in student outcomes. However, the authors test only whether the presence of a court order is associated with changes in outcomes and never once measure whether substantive school finance reforms followed the court order, yet they still conclude that court-ordered funding increases had no effect.

Greene, J. P. & Trivitt, (2008). Can Judges Improve Academic Achievement? Peabody Journal of Education, 83(2), 224-237.

Neymotin, F. (2010). The Relationship between School Funding and Student Achievement in Kansas Public Schools. Journal of Education Finance, 36(1), 88-108.

[xiii] Murnane, R. J., & Olsen, R. (1989). The effects of salaries and opportunity costs on length of stay in teaching: Evidence from Michigan. Review of Economics and Statistics, 71(2), 347-352.

[xiv] Figlio, D. N. (2002). Can Public Schools Buy Better-Qualified Teachers? Industrial and Labor Relations Review, 55, 686-699.

Figlio, D. N. (1997). Teacher Salaries and Teacher Quality. Economics Letters, 55, 267-271.

Ferguson, R. (1991). Paying for Public Education: New Evidence on How and Why Money Matters. Harvard Journal on Legislation, 28(2), 465-498.

[xv] Loeb, S., & Page, M. (2000). Examining the Link Between Teacher Wages and Student Outcomes: The Importance of Alternative Labor Market Opportunities and Non-Pecuniary Variation. Review of Economics and Statistics, 82(3), 393-408.

[xvi] Figlio, D. N., & Rueben, K. (2001). Tax Limits and the Qualifications of New Teachers. Journal of Public Economics (April), 49-71.

See also:

Downes, T. A., & Figlio, D. N. (1999). Do Tax and Expenditure Limits Provide a Free Lunch? Evidence on the Link Between Limits and Public Sector Service Quality, 52(1), 113-128.

[xvii] Ondrich, J., Pas, E., & Yinger, J. (2008). The Determinants of Teacher Attrition in Upstate New York. Public Finance Review, 36(1), 112-144.

[xviii] See http://www2.ed.gov/rschstat/research/pubs/rigorousevid/rigorousevid.pdf;

Jeremy D. Finn and Charles M. Achilles, “Tennessee’s Class Size Study: Findings, Implications, Misconceptions,” Educational Evaluation and Policy Analysis 21, no. 2 (Summer 1999): 97-109;

Jeremy Finn et al., “The Enduring Effects of Small Classes,” Teachers College Record 103, no. 2 (April 2001): 145–183; http://www.tcrecord.org/pdf/10725.pdf;

Alan Krueger, “Would Smaller Class Sizes Help Close the Black-White Achievement Gap?” Working Paper #451 (Princeton, NJ: Industrial Relations Section, Department of Economics, Princeton University, 2001) http://www.irs.princeton.edu/pubs/working_papers.html;

Henry M. Levin, “The Public Returns to Public Educational Investments in African American Males,” Dijon Conference, University of Bourgogne, France. May 2006. http://www.u-bourgogne.fr/colloque-iredu/posterscom/communications/LEVIN.pdf;

Spyros Konstantopoulos and Vicki Chung, “What Are the Long-Term Effects of Small Classes on the Achievement Gap? Evidence from the Lasting Benefits Study,” American Journal of Education 116, no. 1 (November 2009): 125-154.

[xix] Jepsen, C., & Rivkin, S. (2002). What is the Tradeoff Between Smaller Classes and Teacher Quality? NBER Working Paper No. 9205. Cambridge, MA: National Bureau of Economic Research. http://www.nber.org/papers/w9205

“The results show that, all else equal, smaller classes raise third-grade mathematics and reading achievement, particularly for lower-income students. However, the expansion of the teaching force required to staff the additional classrooms appears to have led to a deterioration in average teacher quality in schools serving a predominantly black student body. This deterioration partially or, in some cases, fully offset the benefits of smaller classes, demonstrating the importance of considering all implications of any policy change.” p. 1

For further discussion of the complexities of evaluating class size reduction in a dynamic policy context, see:

David Sims, “A Strategic Response to Class Size Reduction: Combination Classes and Student Achievement in California,” Journal of Policy Analysis and Management, 27(3) (2008): 457–478

David Sims, “Crowding Peter to Educate Paul: Lessons from a Class Size Reduction Externality,” Economics of Education Review, 28 (2009): 465–473.

Matthew M. Chingos, “The Impact of a Universal Class-Size Reduction Policy: Evidence from Florida’s Statewide Mandate,” Program on Education Policy and Governance Working Paper 10-03 (2010).

[xx] Ehrenberg, R. G., Brewer, D., Gamoran, A., & Willms, J. D. (2001). Class Size and Student Achievement. Psychological Science in the Public Interest, 2(1), 1-30.

[xxi] Baker, B., & Welner, K. G. (2012). Evidence and rigor scrutinizing the rhetorical embrace of evidence-based decision making. Educational Researcher, 41(3), 98-101.

[xxii] Loeb, S., Darling-Hammond, L., & Luczak, J. (2005). How teaching conditions predict teacher turnover in California schools. Peabody Journal of Education, 80(3), 44-70.

Isenberg, E. P. (2010). The Effect of Class Size on Teacher Attrition: Evidence from Class Size Reduction Policies in New York State. US Census Bureau Center for Economic Studies Paper No. CES-WP-10-05.