It’s been a while since I’ve taken the time to write about New Jersey school finance. It has apparently been too long. I’ve written much about New York school finance and Kansas school finance. And the parallels are straightforward.

In Kansas, around 2006, the state’s high court issued an order in the case of Montoy v. Kansas that the legislature remedy both inequities and inadequacies in the funding of the state’s school system. The legislature adopted modifications to its school funding formula that would be phased in from 2007 forward. The high court accepted that remedy and dismissed oversight of the Montoy case. As the economy tanked around 2009, the state began cutting and cutting more, never coming close to the original promises of the 2007 formula. Because Montoy had been dismissed, a new case was filed (Gannon v. Kansas), resulting in a new court order to adequately and equitably fund schools – the battle over that court order is ongoing. All in all, I would assert that while one can hardly declare these cases a smashing success, the equity and adequacy of funding in Kansas are likely better than they would have been in the absence of judicial pressure on the legislature (empirical research backs this up as a general rule). I have written a few recent briefs on this topic:

- The Efficiency Smokescreen, “Cuts Cause no Harm” Argument & The 3 Kansas Judges who Saw Right Through It!

- Unconstitutional by any other name is still Unconstitutional

In New York, around 2006, the state’s high court issued an order in the case of CFE vs. New York. The legislature responded by adopting a foundation aid formula that would establish for each district an “adequacy target” and then through a combination of required local effort, and state aid, district revenues would be raised to that target. This too was to be phased in over time. The court graciously accepted the state’s offering. But the state never even came close, leaving some very high need districts with per pupil aid shortfalls over $5,000. Districts experiencing some of the most egregious funding shortfalls (including some that make my most disadvantaged districts lists) included places like Utica and Poughkeepsie. These districts brought a new lawsuit against the state which was heard a little over a year ago in Albany. And still they wait, but with the possibility that judicial pressure will lead to at least some improvements. I have written a few posts on this topic, including:

- On how New York State crafted a low-ball estimate of what districts needed to achieve adequate outcomes and then still completely failed to fund it.

- Angry Andy’s Failing Schools & the Finger of Blame

- Angry Andy’s not so generous state aid deal: A look at the 2015-16 Aid Runs in NY

Which brings us to New Jersey! New Jersey had been under judicial oversight for an extended period in the Abbott v. Burke series of cases pertaining to school funding equity and adequacy. The most aggressive of these orders came in 1998 and focused specifically on the programs and services that must be made available to children in the Abbott plaintiff districts (mainly high minority/poverty concentration, relatively large (not all) urban districts). The state largely complied… for a period of time… with this order. Tiring of court oversight and seeking a path forward to a statewide school funding solution, the legislature passed the 2008 School Funding Reform Act (SFRA). I discussed elements of this formula in a 2011 post. The formula, much like remedies proposed in Kansas and New York in 2007, was intended to be phased in over the next few years, leveling up districts whose present aid levels were lower than needed to meet the state’s new calculated “adequacy targets” and, in some cases, phasing out aid that put districts above their “adequacy targets.” The court accepted this formula as a legitimate attempt to meet the demands of Abbott and reason to phase out oversight. Though in 2011, the court did put its foot down when promises were broken. I address this in a 2011 post.

Even before this time – actually partly because SFRA was less “progressive” than prior funding – the overall progressiveness of New Jersey’s school funding system had begun to erode.

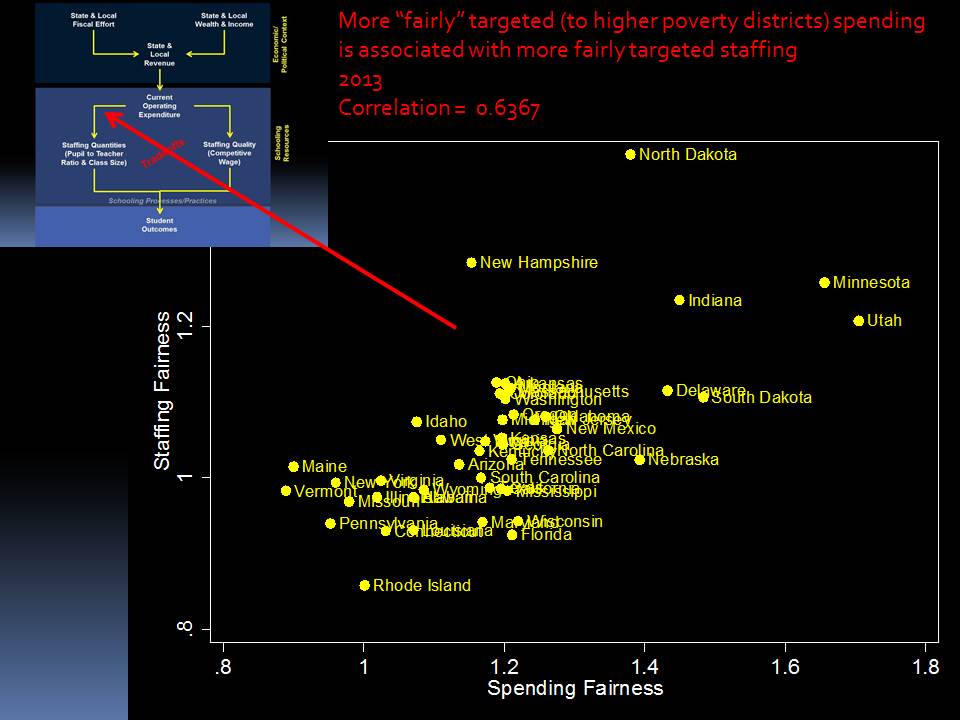

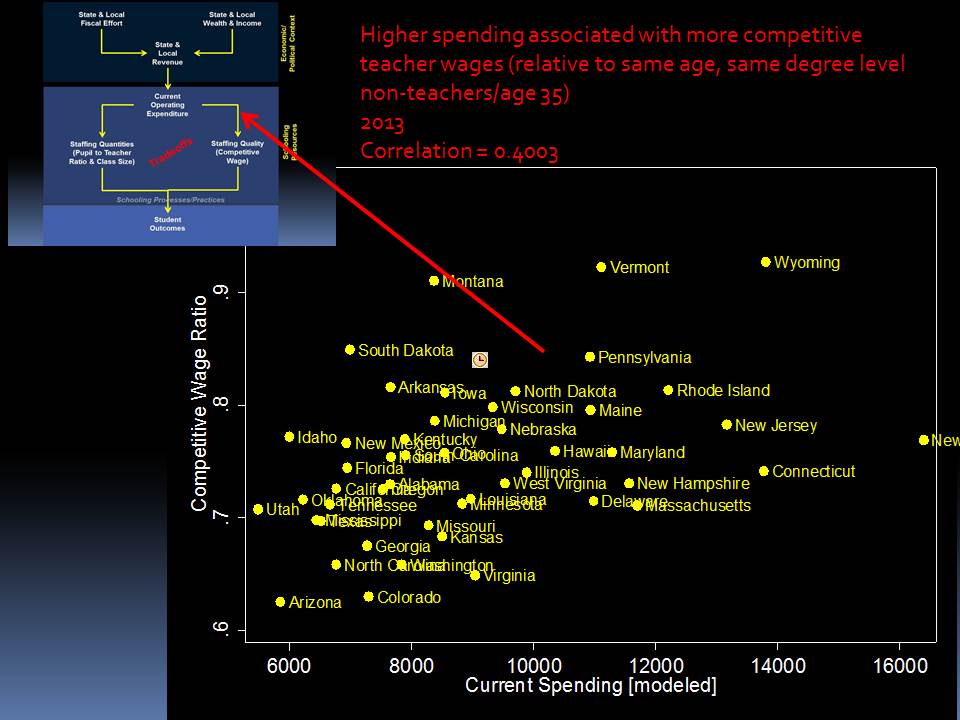

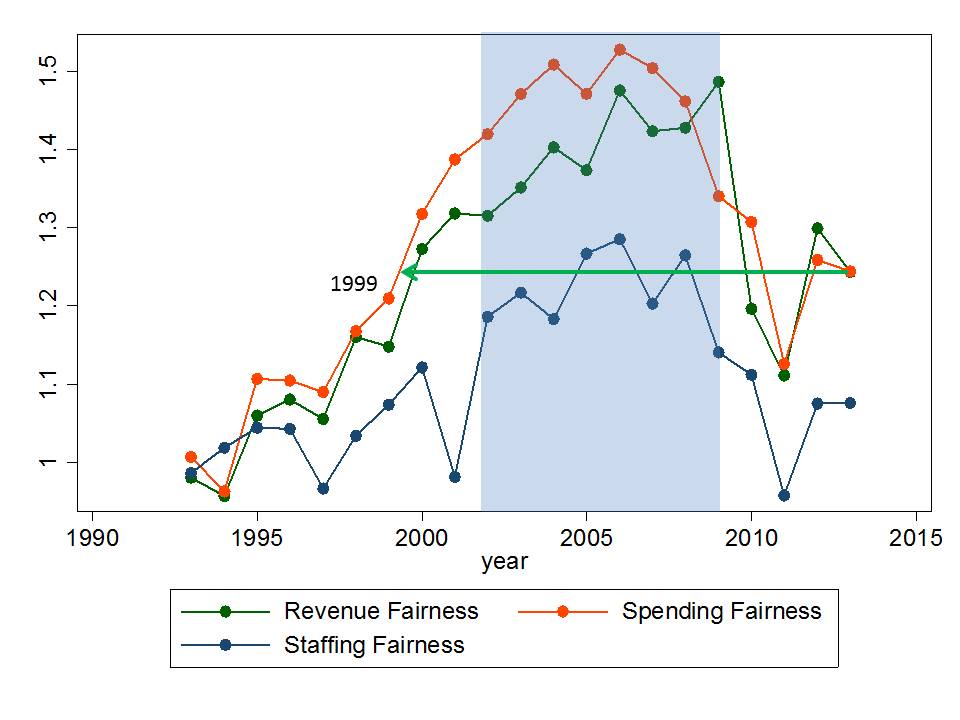

Figure 1 shows us a tracking of the progressiveness of a) state and local revenue, b) current spending per pupil and c) staffing ratios per 100 pupils, where a ratio of 1.2 indicates that the very high poverty school district would have 20% more resources than the very low poverty district. An index of 1.0 would indicate parity – which is not the same as equity.
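To make the ratio concrete, here is a minimal sketch of how such an index reads. It is only an illustration: the function name and the per-pupil dollar figures are hypothetical, and it simply divides one district's resources by another's rather than reproducing the estimation behind Figure 1.

```python
def progressiveness_ratio(high_poverty_resource: float,
                          low_poverty_resource: float) -> float:
    """Resources in a very high poverty district relative to a very low poverty one.

    A value above 1.0 means the high poverty district has more resources
    (progressive), 1.0 means parity, and below 1.0 means it has less (regressive).
    """
    if low_poverty_resource <= 0:
        raise ValueError("low_poverty_resource must be positive")
    return high_poverty_resource / low_poverty_resource


# Hypothetical per-pupil revenue figures, chosen only to illustrate the scale.
ratio = progressiveness_ratio(18_000, 15_000)
print(round(ratio, 2))  # 1.2, i.e. 20% more resources in the high poverty district
```

The same ratio can be applied to spending per pupil or to staff per 100 pupils; only the inputs change.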

In New Jersey, funding and staffing progressiveness did scale up after the 1998 Abbott ruling. It reached a peak in the mid-2000s, and subsequently (prior to and during implementation of SFRA, under the previous administration) began its steep decline.

Figure 1 – Progressiveness of NJ Resources over Time

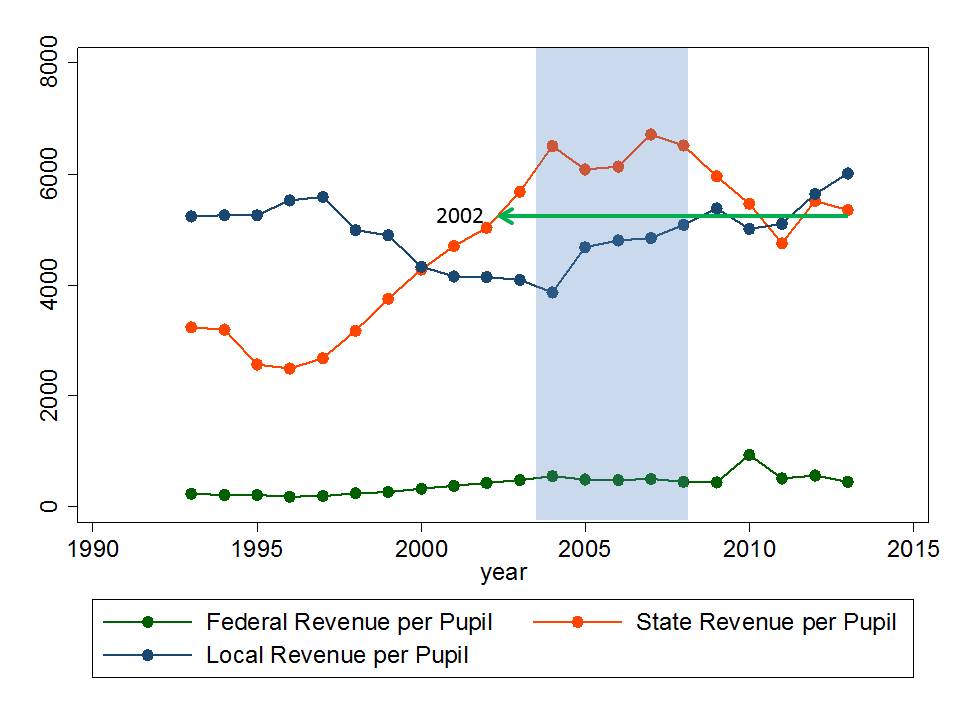

Figure 2 shows that during this period, state aid to the “average” school district declined substantially and local contributions increased.

Figure 2 – State, Local and Federal Revenues over Time

Which brings us to the here and now, and parallels between New Jersey, Kansas and New York.

All three states adopted school funding formulas that by their design identify an “adequate” level of spending for each local public school district based on students served, location, etc. These formulas, while imperfect and often lowball, politically manipulated estimates of actual needs and costs (New York version, New Jersey version), are in a sense each state’s own declaration of what it considers to be constitutionally adequate funding for each district. After all, that’s how each state got its formula through its high court!

So then, as I’ve done in numerous posts on New York state school finance, let’s accept these foundation aid formula calculations as a low bar – a conservative estimate – the STATE’S OWN ADMITTED CONSTITUTIONAL ADEQUACY TARGET.

In my analyses of New York school funding, I look at two types of funding gaps – the gap between the state aid the formula calls for and the state aid actually received toward reaching district adequacy targets, and the gap in (relevant components of) per pupil spending when compared to each district’s adequate spending target. This latter gap is most relevant from the standpoint of framing a constitutional challenge, but to the extent that it is the former which causes the latter, those state aid gaps matter too. Here, let’s take a look at gaps between spending and adequacy targets.

Figure 3 compares district per pupil operating expenditure (Comparative Spending Guide Indicator 1) to per pupil adequacy targets (not including transportation and security). So, I’m actually giving the state a small break here. But that won’t help them much. Districts are sorted from lowest to highest shares of low income children served.

As we can see, among districts with very low shares of low income children, many spend well above what they would need to be merely adequate. And I assure you, few individuals in those communities are looking to post banners over their schoolhouse door that say “Providing a Constitutionally Adequate Education for Our Kids.” As we move toward the right hand side of the figure, many large high poverty urban districts fall one to a few thousand dollars per pupil below their adequacy target. But more disturbingly, there are several smaller, but not tiny, high poverty districts that appear to be more than $5,000 per pupil below their adequacy target!

Figure 3 – District Spending vs. Adequacy Targets (SFRA Calculations) for 2014-15

Figure 4 recasts Figure 3 in terms of adequacy gaps (differences between what each district should be able to spend and what it does spend, per pupil). As low income shares increase, adequacy gaps increase. Districts with an orange dot indicator have adequacy gaps exceeding $5,000 per pupil. Where adequacy targets are around $15,000 per pupil, that means a gap of more than 30%!

Figure 4 – District Adequacy Gaps relative to SFRA Calculated Adequacy Targets 2014-15
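The gap calculation itself is simple arithmetic, and a short sketch may help readers who want to run it on their own district data. Everything named here is an assumption for illustration: the `District` record, the function names, and the three made-up districts are hypothetical and are not drawn from the SFRA files or the Comparative Spending Guide. The sign convention follows the text: a positive gap means the district spends less than its target.

```python
from dataclasses import dataclass


@dataclass
class District:
    name: str
    spending_per_pupil: float         # what the district actually spends
    adequacy_target_per_pupil: float  # what the formula says it should be able to spend


def adequacy_gap(d: District) -> float:
    """Target minus actual spending, per pupil. Positive means a shortfall."""
    return d.adequacy_target_per_pupil - d.spending_per_pupil


def gap_share(d: District) -> float:
    """The gap expressed as a fraction of the adequacy target."""
    return adequacy_gap(d) / d.adequacy_target_per_pupil


def flag_large_gaps(districts: list[District], threshold: float = 5_000) -> list[str]:
    """Names of districts whose shortfall exceeds the threshold (the orange dots)."""
    return [d.name for d in districts if adequacy_gap(d) > threshold]


# Made-up districts, for illustration only.
districts = [
    District("District A", spending_per_pupil=20_000, adequacy_target_per_pupil=14_000),
    District("District B", spending_per_pupil=9_500, adequacy_target_per_pupil=15_000),
    District("District C", spending_per_pupil=13_500, adequacy_target_per_pupil=15_000),
]

for d in districts:
    print(f"{d.name}: gap ${adequacy_gap(d):,.0f} ({gap_share(d):.0%} of target)")

print(flag_large_gaps(districts))
```

In this toy example only District B crosses the $5,000 threshold; District A has a negative gap because it spends above its target.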

That’s not a trivial gap. It’s huge. And one might argue, quite likely unconstitutional – when measured against the state’s own supposed constitutionally adequate target (that is, without deliberating even whether SFRA itself would provide adequate funding if fully funded!)

And so I find myself on this April Fool’s Day of 2016 looking for Costello. It seems only appropriate that in my future writings on New Jersey school finance I have the opportunity to chronicle New Jersey school finance litigation from Abbott to Costello.

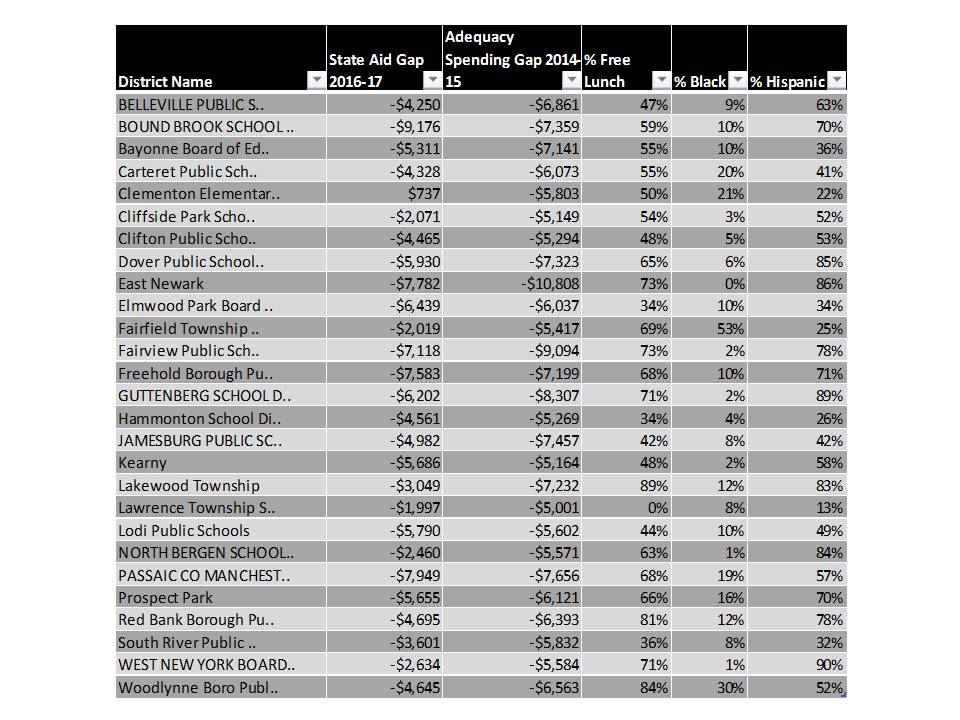

And where might we find Costello? Well, here are the districts (first cut at these data – still vetting) with those huge spending/adequacy gaps:

Figure 5 – New Jersey districts with excessively large adequacy gaps

To be clear, many New Jersey districts have large and very large adequacy gaps, above and beyond these. These are just the most egregious, and thus the most logical subset of districts to bring the next round of litigation (at least by a first cut at the data).

As it turns out, this traditionally Irish or Italian (according to Wikipedia) surname Costello may not be the easiest to find in our potential plaintiff districts.

It turns out that the strongest demographic correlate of school district adequacy gaps is a district’s share of students who are Hispanic. That is, there seems to be an embedded racial/ethnic bias in the funding gaps faced by New Jersey school districts.

Figure 6 – Demographic Correlates of Adequacy Gaps

These districts, in my estimation, have a pretty strong case to be filed regarding the inadequacy and related inequity of their funding, and the potential harmful impacts on their students. More on those harmful impacts in a future post or full length report!